Your p2p demo runs locally. Now what?

You've built a peer-to-peer (p2p) demo that hums along on your local machine. Peers connect, messages flow, and for a moment, you're basking in localhost bliss. Then comes the inevitable question: how do you migrate this from your laptop to the cloud?

Enter deployer, a CLI and library that bridges the gap between localhost and remote host. Roll custom binaries and configurations to instances in multiple regions, configure networking policies that allow peers to talk to each other, automatically collect metrics and logs, and clean up when you're done.

Spend your time coding, not tweaking CIDR blocks.

From Localhost to the Cloud

Deploying a p2p application isn't as straightforward as spinning up a web server. You'll need to reckon with:

- Networking: How do your nodes discover each other across regions?

- Deployment: How do you push unique binaries and configs to each instance?

- Observability: When a node in Brazil starts acting up, how do you know?

- Cleanup: When you're done, how do you ensure you don't leave a mess behind?

You could spend weeks configuring VPC peering, wrestling with IAM roles, and praying that your Grafana dashboard actually shows something. Or, you could use deployer.

Introducing deployer

deployer is your one-stop shop for deploying p2p applications in the cloud. It's both a CLI for quick wins and a Rust library for when you need to get custom. Think of it as a high-level abstraction over infrastructure APIs, with sane defaults and observability built in.

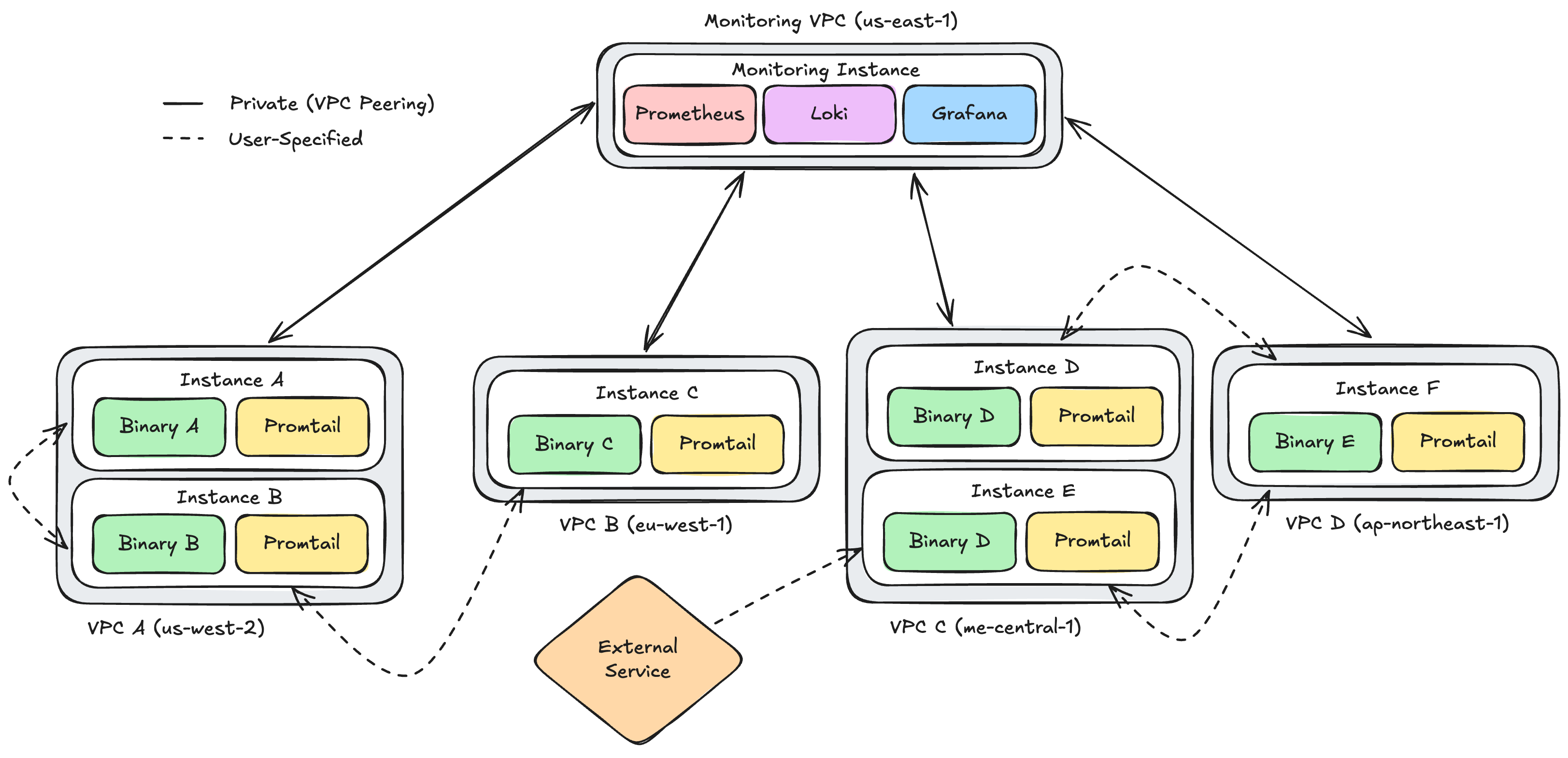

The first deployer dialect, deployer::ec2, is focused on reproducible benchmarking. With a single YAML config, it performs:

- Automated Provisioning: Spins up EC2 instances in multiple regions, sets up VPCs, internet gateways, route tables, subnets, security groups, SSH keys, and connects everything together with VPC peering.

- Code Deployment: Uploads custom binaries and configs (specified uniquely for each instance), installs each binary as a systemd service, and starts it. Pushes updates in place.

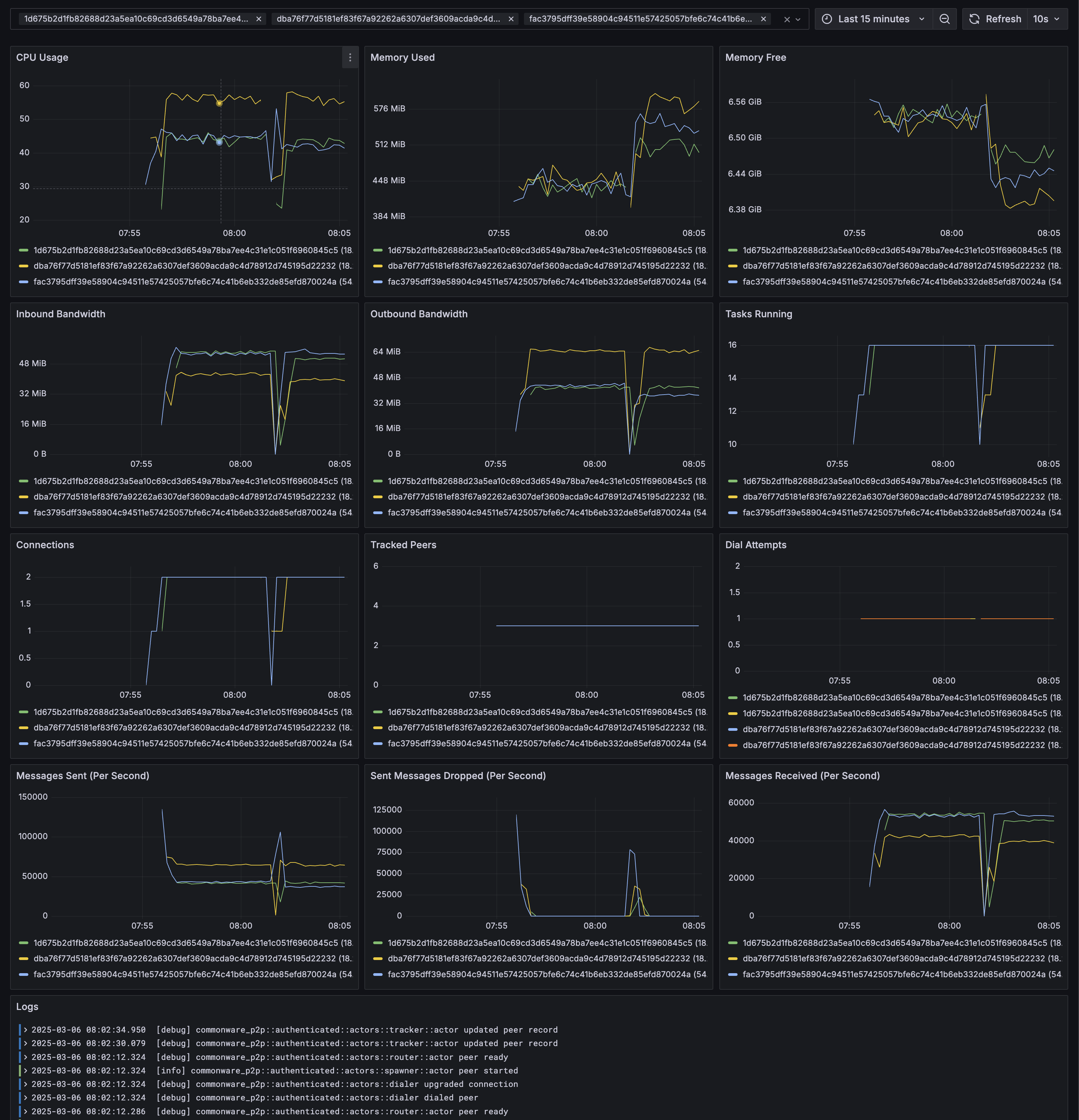

- Configured Monitoring: Launches a dedicated monitoring instance (running Prometheus, Grafana, and Loki) configured to ingest data from all deployed instances.

- 1-Command Cleanup: Destroys everything when you're done, protecting you from surprise charges from that box you forgot to shut down.
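
To make the provisioning step concrete, here is a minimal sketch of the network planning that multi-region VPC peering implies. VPC peering is not transitive and peered VPCs cannot have overlapping CIDR blocks, so every region needs its own address range and every pair of regions needs its own peering connection. The names below (`RegionNetwork`, `plan_networks`, `peering_pairs`) and the 10.x.0.0/16 allocation scheme are assumptions made for illustration, not deployer::ec2's actual types or logic.

```rust
use std::net::Ipv4Addr;

/// Hypothetical per-region network plan (not deployer's actual types).
#[derive(Debug, Clone, PartialEq)]
struct RegionNetwork {
    region: String,
    /// VPC CIDR block, e.g. "10.0.0.0/16".
    cidr: String,
}

/// Assign each region its own /16 inside 10.0.0.0/8 so that no two VPCs overlap.
fn plan_networks(regions: &[&str]) -> Vec<RegionNetwork> {
    assert!(regions.len() <= 256, "only 256 /16 blocks fit in 10.0.0.0/8");
    regions
        .iter()
        .enumerate()
        .map(|(i, region)| RegionNetwork {
            region: region.to_string(),
            cidr: format!("{}/16", Ipv4Addr::new(10, i as u8, 0, 0)),
        })
        .collect()
}

/// Enumerate every unordered pair of regions.
/// A full mesh of n regions needs n * (n - 1) / 2 peering connections.
fn peering_pairs(networks: &[RegionNetwork]) -> Vec<(String, String)> {
    let mut pairs = Vec::new();
    for (i, a) in networks.iter().enumerate() {
        for b in &networks[i + 1..] {
            pairs.push((a.region.clone(), b.region.clone()));
        }
    }
    pairs
}

fn main() {
    let networks = plan_networks(&["us-east-1", "eu-west-1", "sa-east-1"]);
    for n in &networks {
        println!("{} -> {}", n.region, n.cidr);
    }
    for (a, b) in peering_pairs(&networks) {
        println!("peer {a} <-> {b}");
    }
}
```

The number of peering connections grows quadratically with the number of regions, which is one reason this wiring is tedious to maintain by hand.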

Try it Out: flood

To demonstrate how to use deployer, we built a p2p::authenticated benchmarking tool called flood. flood does one thing: spam peers with as many random messages as possible.

flood leverages deployer to transform a local stress test into a global one. Here's how it works:

- Setup: The setup binary (included in the flood crate) generates a deployer::ec2 config.yaml file and peer-specific configs from user-specified peer, bootstrapper, region, and performance parameters (like message size and message backlog).

- Compile: flood uses Docker to compile the flood binary for ARM64 (deployer::ec2 uses Graviton EC2 instances).

- Deploy: deployer ec2 create spins up instances across regions, wires them together, and starts the flood binary on each peer. The custom Grafana dashboard is served at http://monitoring-ip:3000/d/flood.

- Tweak: deployer ec2 update deploys new binaries and configs on each peer.

- Cleanup: deployer ec2 destroy tears down all provisioned infrastructure.
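
The Setup step above can be pictured as a small function that expands a few parameters into one config per peer. The sketch below is only an illustration under stated assumptions: the `PeerConfig` struct, the `generate_peers` function, the `peer-N` naming, and the round-robin region assignment are all hypothetical and do not reflect the real flood setup binary or the deployer::ec2 config schema.

```rust
/// Hypothetical per-peer config (not the real flood config format).
#[derive(Debug, Clone, PartialEq)]
struct PeerConfig {
    name: String,
    region: String,
    /// Names of the peers this peer dials first to join the network.
    bootstrappers: Vec<String>,
    message_size: usize,
    message_backlog: usize,
}

/// Expand user-specified parameters into one config per peer.
/// Assumptions: the first `bootstrappers` peers act as bootstrappers,
/// and peers are spread across regions round-robin.
fn generate_peers(
    peers: usize,
    bootstrappers: usize,
    regions: &[&str],
    message_size: usize,
    message_backlog: usize,
) -> Vec<PeerConfig> {
    assert!(!regions.is_empty(), "at least one region is required");
    assert!(bootstrappers <= peers, "cannot have more bootstrappers than peers");

    let names: Vec<String> = (0..peers).map(|i| format!("peer-{i}")).collect();
    let bootstrapper_names = names[..bootstrappers].to_vec();

    names
        .iter()
        .enumerate()
        .map(|(i, name)| PeerConfig {
            name: name.clone(),
            region: regions[i % regions.len()].to_string(),
            // A bootstrapper does not list itself.
            bootstrappers: bootstrapper_names
                .iter()
                .filter(|b| *b != name)
                .cloned()
                .collect(),
            message_size,
            message_backlog,
        })
        .collect()
}

fn main() {
    let configs = generate_peers(4, 1, &["us-east-1", "eu-west-1"], 1024, 16);
    for config in &configs {
        println!("{config:?}");
    }
}
```

Because every peer gets its own config, a tool like this pairs naturally with a deployment step that uploads a distinct binary and config to each instance.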

Step through the full walkthrough in the

A local demo is a proof of concept; deployer::ec2 makes it a proving ground.