Deliver Us in Pieces

Validators on most blockchain networks provision enough bandwidth for the moments when they are leader, and leave it underutilized the rest of the time.

In a "standard" blockchain (akin to marshal::standard over broadcast::buffered), the proposer pushes the whole block to each validator. Even on multi-Gbps links, distributing a block this way can take hundreds of milliseconds. Meanwhile, the validators waiting for that block have ample bandwidth (provisioned for their own turns as proposer) but leave it idle. What if they could help?
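To put rough numbers on the problem, here is a back-of-the-envelope sketch of how many bytes the leader must upload per block. It assumes 50 validators (matching the Alto setup below), a 4 MiB block, a 5 Gbps uplink, and a coded path that splits the block into k = 17 data shards (f + 1 for n = 50, f = 16). These parameters and function names are illustrative assumptions, not marshal's actual configuration, and inclusion proofs and protocol overhead are ignored.

```rust
/// Bytes the leader uploads per block in the "standard" path, where the
/// full block is pushed to each of the other `n - 1` validators.
fn standard_leader_egress(n: u64, block_bytes: u64) -> u64 {
    (n - 1) * block_bytes
}

/// Bytes the leader uploads in a coded path, assuming (hypothetically) the
/// block is split into `k` data shards of about `block_bytes / k` each and
/// the leader sends exactly one shard to each other validator.
fn coded_leader_egress(n: u64, k: u64, block_bytes: u64) -> u64 {
    let shard_bytes = (block_bytes + k - 1) / k; // ceil(block_bytes / k)
    (n - 1) * shard_bytes
}

/// Seconds to push `bytes` through an uplink of `gbps` gigabits per second,
/// ignoring propagation delay and protocol overhead.
fn upload_seconds(bytes: u64, gbps: f64) -> f64 {
    (bytes as f64 * 8.0) / (gbps * 1e9)
}
```

With these inputs the standard path uploads 196 MiB from the leader, roughly 0.33 s of serialization at 5 Gbps before any propagation delay, while the coded path uploads about 11.5 MiB. The remaining work shifts to the other validators, each of which relays its own shard to its peers.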

Today, we are introducing a new marshal dialect: marshal::coding. A leader commits to an erasure-coded block, and validators verify their uniquely constructed shards and relay them to other validators. All of this happens without slowing down consensus::simplex, which supports safely voting for a block before it is fully reconstructed.
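The key property of erasure coding is that any sufficiently large subset of shards recovers the block. The sketch below illustrates it with the simplest such code: k data shards plus a single XOR parity shard, which tolerates the loss of any one shard. This is a dependency-free stand-in, not Reed-Solomon; a Reed-Solomon code such as coding::reed_solomon tolerates up to n - k missing shards, and the `encode`/`decode` functions here are hypothetical, not its API.

```rust
/// Split `data` into `k` equal-length shards (the last one zero-padded) and
/// append one XOR parity shard. Any `k` of the `k + 1` shards recover `data`.
fn encode(data: &[u8], k: usize) -> Vec<Vec<u8>> {
    assert!(k > 0, "need at least one data shard");
    let shard_len = ((data.len() + k - 1) / k).max(1);
    let mut shards: Vec<Vec<u8>> = (0..k)
        .map(|i| {
            let start = (i * shard_len).min(data.len());
            let end = ((i + 1) * shard_len).min(data.len());
            let mut shard = data[start..end].to_vec();
            shard.resize(shard_len, 0); // zero-pad so all shards are equal length
            shard
        })
        .collect();
    // Parity shard: byte-wise XOR of every data shard.
    let mut parity = vec![0u8; shard_len];
    for shard in &shards {
        for (p, b) in parity.iter_mut().zip(shard) {
            *p ^= b;
        }
    }
    shards.push(parity);
    shards
}

/// Recover the original `len` bytes from `k + 1` slots, at most one of which
/// is `None`. Returns `None` if too many shards are missing.
fn decode(shards: &[Option<Vec<u8>>], len: usize) -> Option<Vec<u8>> {
    let k = shards.len().checked_sub(1)?;
    let missing: Vec<usize> = (0..shards.len()).filter(|&i| shards[i].is_none()).collect();
    if missing.len() > 1 {
        return None; // a single parity shard can repair only one erasure
    }
    let shard_len = shards.iter().flatten().next()?.len();
    let mut full: Vec<Vec<u8>> = shards.iter().map(|s| s.clone().unwrap_or_default()).collect();
    if let Some(&m) = missing.first() {
        // The XOR of all k + 1 shards is zero, so the missing one is the XOR of the rest.
        let mut rebuilt = vec![0u8; shard_len];
        for (i, shard) in full.iter().enumerate() {
            if i != m {
                for (r, b) in rebuilt.iter_mut().zip(shard) {
                    *r ^= b;
                }
            }
        }
        full[m] = rebuilt;
    }
    let mut data = full[..k].concat();
    data.truncate(len); // drop the zero padding
    Some(data)
}
```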

Certification: A Second Decision Point

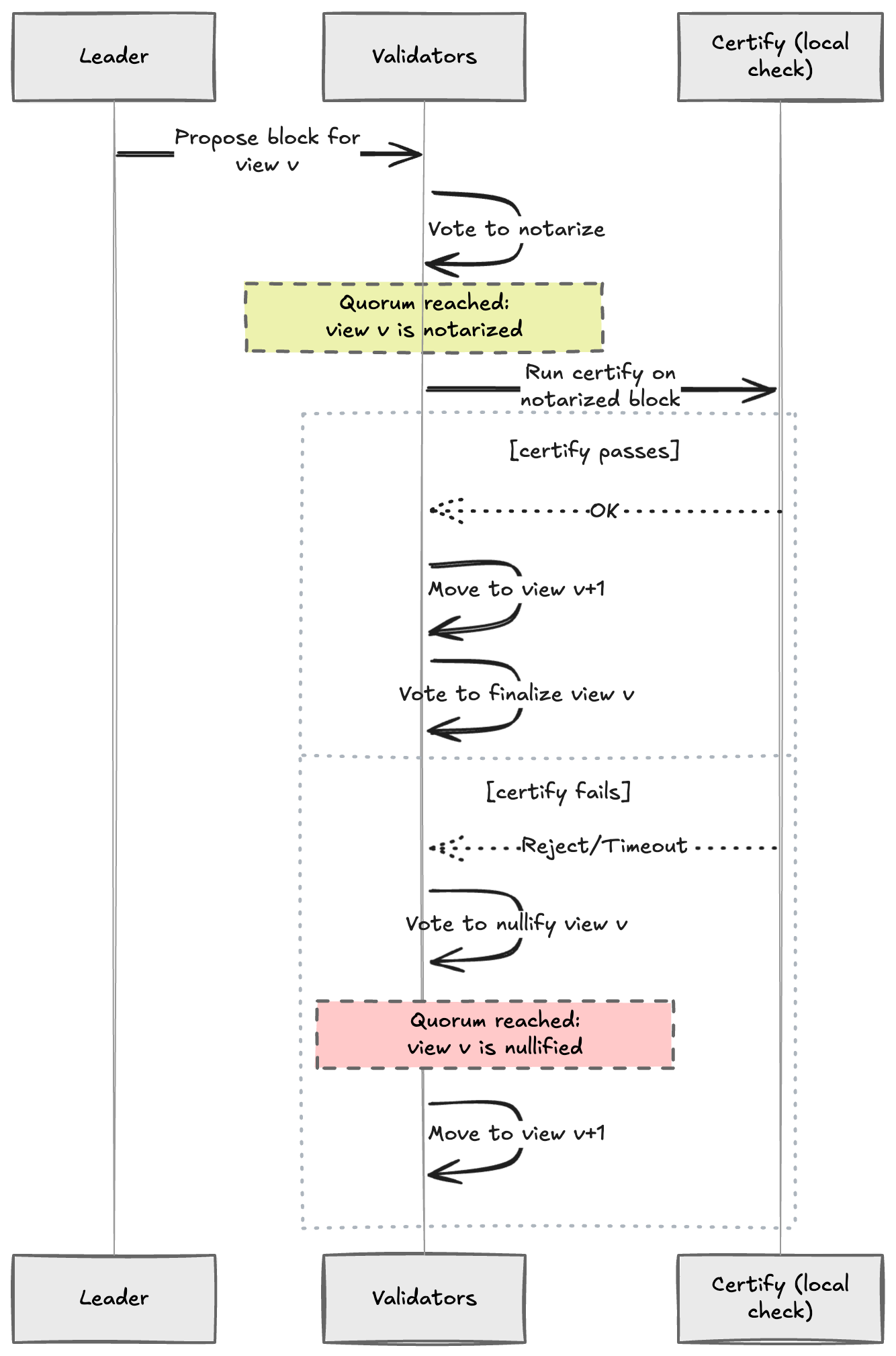

The foundation of this integration is a new phase in a view's progression to finalization within consensus::simplex. "Certification" offers a local, deterministic method to nullify a view after voting on partial information.

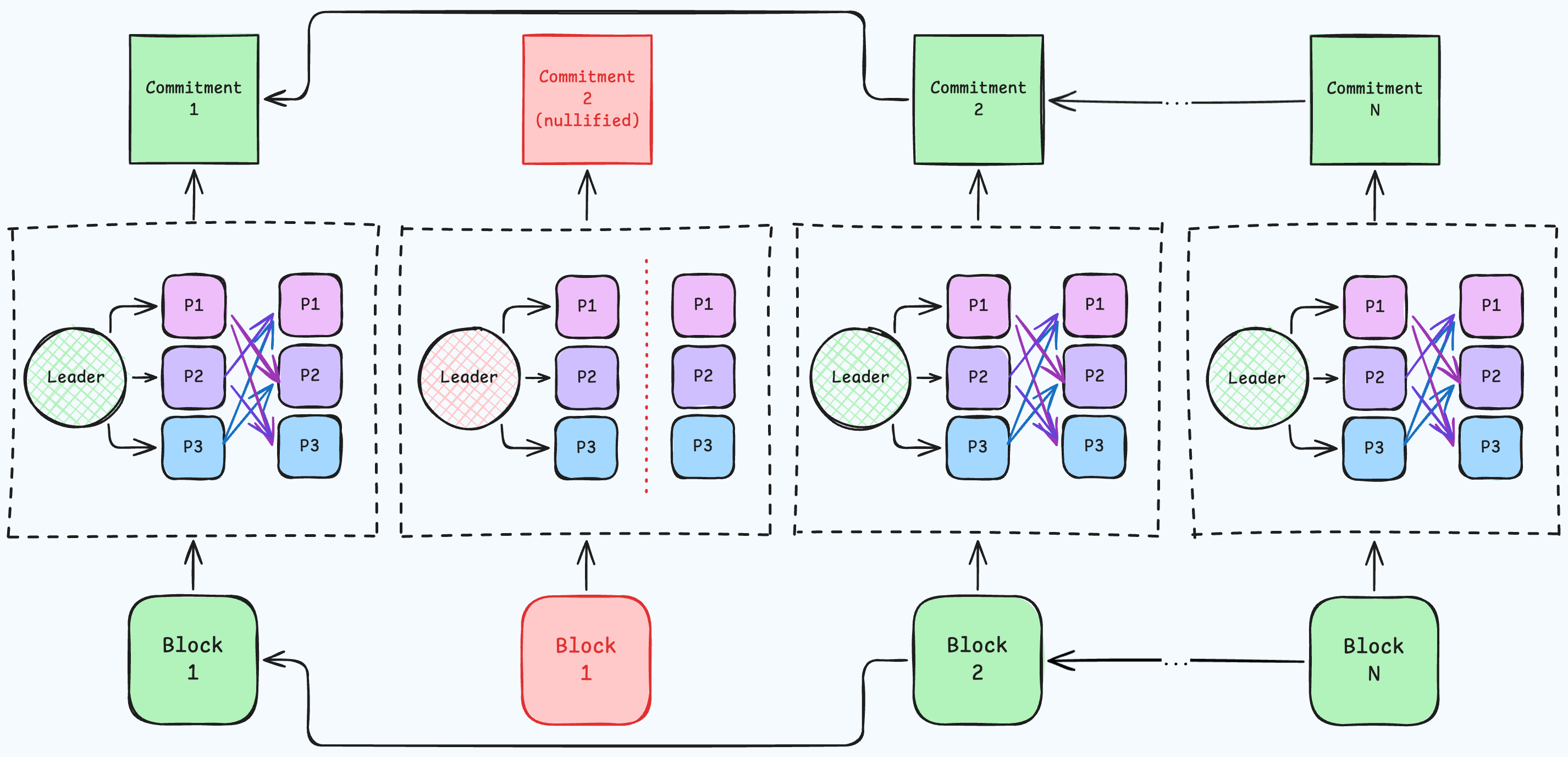

A notarization proves that a quorum observed the same commitment. It does not force replicas to keep going down that path. After notarization forms, the network can still change course (ensuring that invalid data is not enshrined in the canonical chain).

If certify returns true, the validator advances to the next view and then votes to finalize the certified view. If certify returns false, the validator votes to nullify and refuses to build on that payload. In other words: notarization says “we saw it,” certification decides “we will build on it.”
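The two outcomes can be modeled as a small state transition. This is a hypothetical sketch (`Replica`, `Action`, and `on_certify` are illustrative names, not the consensus::simplex API) that ignores timeouts, vote aggregation, and networking; it only captures the rule that a replica votes to finalize a view, and will build on its payload, only if that view certified locally.

```rust
use std::collections::BTreeSet;

/// What a replica does once its local certify check for a notarized view returns.
#[derive(Debug, PartialEq, Eq)]
enum Action {
    /// Advance to `next_view`, then vote to finalize the certified view `finalize`.
    AdvanceAndFinalize { next_view: u64, finalize: u64 },
    /// Vote to nullify `view`; its payload will never be used as a parent.
    Nullify { view: u64 },
}

struct Replica {
    current_view: u64,
    /// Views whose payloads passed certify and may therefore be extended.
    certified: BTreeSet<u64>,
}

impl Replica {
    fn new(current_view: u64) -> Self {
        Self { current_view, certified: BTreeSet::new() }
    }

    /// Called after `view` is notarized and `certify` has returned `ok`.
    fn on_certify(&mut self, view: u64, ok: bool) -> Action {
        if ok {
            self.certified.insert(view);
            self.current_view = self.current_view.max(view + 1);
            Action::AdvanceAndFinalize { next_view: self.current_view, finalize: view }
        } else {
            Action::Nullify { view }
        }
    }

    /// Only certified payloads are eligible parents for new proposals.
    fn can_build_on(&self, view: u64) -> bool {
        self.certified.contains(&view)
    }
}
```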

Why This Matters for Coding

In the coding path, this boundary lowers view latency by letting validators vote before they hold the full block.

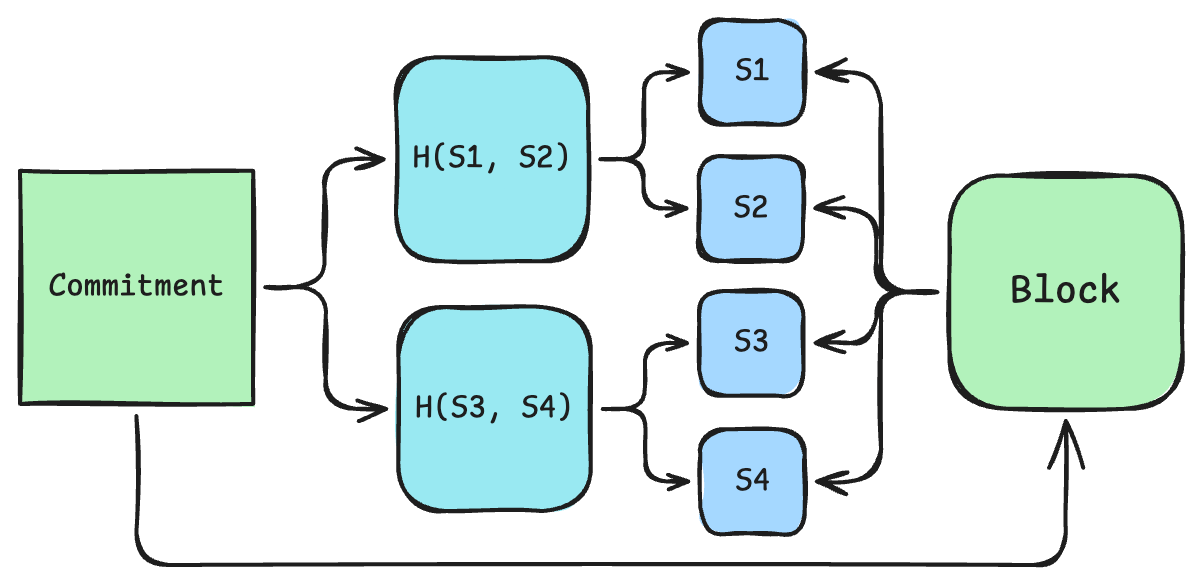

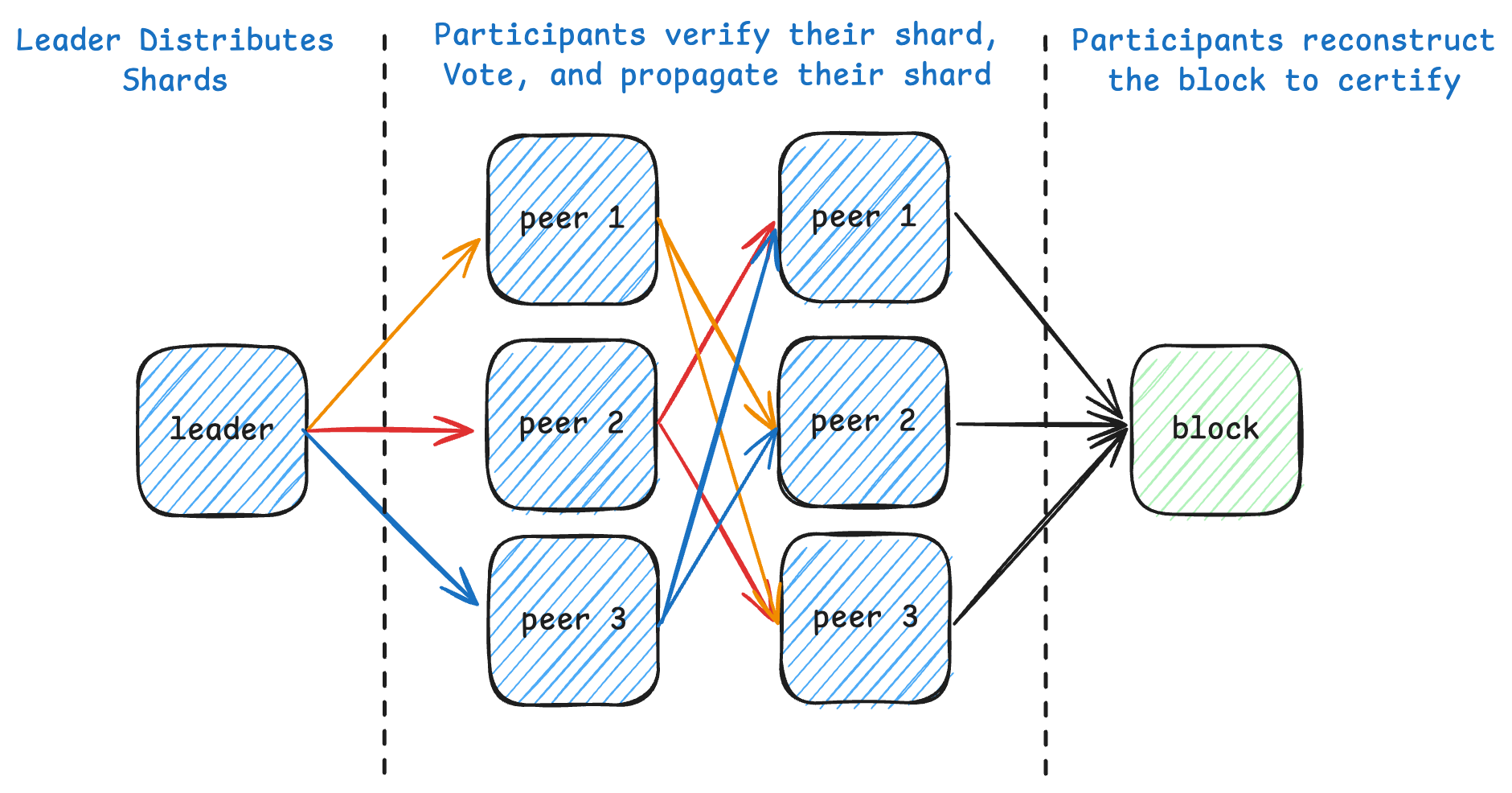

The proposer first erasure-encodes its block and commits to all of the shards; this commitment is the consensus payload. It then sends each validator one shard together with an inclusion proof (a method described in Dispersed Ledger). Upon receipt, validators verify proposal invariants, check their shard against the erasure-coding commitment, relay the shard, and vote to notarize. Full block reconstruction is deferred to certify.
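A minimal sketch of the proposer's commitment and a validator's shard check, assuming a binary Merkle tree over the shards (the text only says the proposer commits to all shards and sends an inclusion proof, so the tree shape is an assumption). To stay dependency-free, std's `DefaultHasher` (SipHash, which is not collision resistant) stands in for cryptography::blake3; it must not be used for real commitments. `commit`, `prove`, and `verify` are hypothetical names.

```rust
use std::collections::hash_map::DefaultHasher;
use std::hash::{Hash, Hasher};

/// Stand-in digest type; a real deployment would use a 32-byte BLAKE3 hash.
type Digest = u64;

/// Hash one shard. The leading tag separates leaves from interior nodes.
fn hash_leaf(shard: &[u8]) -> Digest {
    let mut h = DefaultHasher::new();
    0u8.hash(&mut h);
    shard.hash(&mut h);
    h.finish()
}

/// Hash two child digests into their parent.
fn hash_node(left: Digest, right: Digest) -> Digest {
    let mut h = DefaultHasher::new();
    1u8.hash(&mut h);
    left.hash(&mut h);
    right.hash(&mut h);
    h.finish()
}

/// All tree levels, leaves first. A node without a sibling is paired with itself.
fn tree_levels(shards: &[Vec<u8>]) -> Vec<Vec<Digest>> {
    let leaves: Vec<Digest> = shards.iter().map(|s| hash_leaf(s)).collect();
    let mut levels = vec![leaves];
    while levels.last().unwrap().len() > 1 {
        let prev = levels.last().unwrap();
        let next: Vec<Digest> = prev
            .chunks(2)
            .map(|pair| hash_node(pair[0], *pair.get(1).unwrap_or(&pair[0])))
            .collect();
        levels.push(next);
    }
    levels
}

/// Proposer: the Merkle root over all shards (panics if `shards` is empty).
fn commit(shards: &[Vec<u8>]) -> Digest {
    tree_levels(shards).last().unwrap()[0]
}

/// Proposer: sibling digests on the path from shard `index` up to the root.
fn prove(shards: &[Vec<u8>], mut index: usize) -> Vec<Digest> {
    let levels = tree_levels(shards);
    let mut proof = Vec::new();
    for level in &levels[..levels.len() - 1] {
        proof.push(*level.get(index ^ 1).unwrap_or(&level[index]));
        index /= 2;
    }
    proof
}

/// Validator: recompute the root from its shard and proof, compare to the commitment.
fn verify(root: Digest, shard: &[u8], mut index: usize, proof: &[Digest]) -> bool {
    let mut acc = hash_leaf(shard);
    for &sibling in proof {
        acc = if index % 2 == 0 { hash_node(acc, sibling) } else { hash_node(sibling, acc) };
        index /= 2;
    }
    acc == root
}
```

In this model, a validator that receives `(index, shard, proof)` runs `verify` against the root carried in the proposal, and only relays the shard and votes to notarize if the check passes.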

This is closest in spirit to DispersedSimplex: notarize the commitment first, recover the block later, then gate finalization on local certification. Other stacks that employ erasure-coded broadcast (such as RaptorCast + MonadBFT) typically wait for full block recovery before a validator can vote, which increases view latency and thus transaction latency.

After notarization, certify is the first point where the full block is required: we reconstruct it, check ancestry and epoch invariants, and run full application verification. If reconstruction fails or the reconstructed blob is invalid, certification fails and the view is cleanly nullified, so no bad blocks end up in the finalized chain.
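The certify step can be sketched as a pipeline of checks in which any failure means "do not certify" (and therefore nullify). In this hypothetical sketch, reconstruction and application verification are passed in as closures so the erasure decoder and application logic stay pluggable; the `Block` fields, the rule that height equals parent height plus one, and the error names are illustrative assumptions rather than marshal::coding's types.

```rust
/// Illustrative block fields checked during certify.
#[derive(Debug, Clone, PartialEq)]
struct Block {
    parent: u64, // stand-in for the parent block's digest
    height: u64,
    epoch: u64,
    payload: Vec<u8>,
}

/// What the certified block must extend, taken from the replica's local state.
struct Expected {
    parent: u64,
    parent_height: u64,
    epoch: u64,
}

/// Why certification failed; every variant leads to a nullify vote.
#[derive(Debug, PartialEq)]
enum CertifyError {
    Reconstruction,
    Ancestry,
    Epoch,
    Application,
}

/// Hypothetical certify: reconstruct, check ancestry and epoch, then run full
/// application verification. `Ok` means vote to finalize; `Err` means nullify.
fn certify<R, V>(reconstruct: R, expected: &Expected, verify_app: V) -> Result<Block, CertifyError>
where
    R: FnOnce() -> Option<Block>,
    V: Fn(&Block) -> bool,
{
    let block = reconstruct().ok_or(CertifyError::Reconstruction)?;
    if block.parent != expected.parent || block.height != expected.parent_height + 1 {
        return Err(CertifyError::Ancestry);
    }
    if block.epoch != expected.epoch {
        return Err(CertifyError::Epoch);
    }
    if !verify_app(&block) {
        return Err(CertifyError::Application);
    }
    Ok(block)
}
```

Returning a typed error instead of a bare `bool` keeps the nullify decision identical (`is_ok()`) while making it easy to log why a view was abandoned.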

Out-of-the-Box Performance (If You Want It)

Applications do not need to be rewritten to use this feature. If you already implement the Application/VerifyingApplication interfaces, wrap the same application with marshal::coding and get erasure-coded dissemination automatically.
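The wrapping pattern can be sketched as a generic struct that owns the unchanged application and adds dissemination around it. Everything here is hypothetical: `App` is a simplified stand-in trait that does not reproduce the real Application/VerifyingApplication signatures, `Coding` is not marshal::coding's type, and plain chunking stands in for erasure coding to keep the focus on delegation.

```rust
/// Hypothetical, simplified application interface (not the real
/// Application/VerifyingApplication traits).
trait App {
    /// Build the block that extends a parent at `parent_height`.
    fn propose(&mut self, parent_height: u64) -> Vec<u8>;
    /// Application-level validity check on a full block.
    fn verify(&self, block: &[u8]) -> bool;
}

/// Hypothetical wrapper: the application is unchanged, only dissemination differs.
struct Coding<A: App> {
    inner: A,
    shards: usize,
}

impl<A: App> Coding<A> {
    fn new(inner: A, shards: usize) -> Self {
        assert!(shards > 0, "need at least one shard");
        Self { inner, shards }
    }

    /// Ask the wrapped application for a block, then cut it into pieces.
    /// (Plain chunking stands in for erasure coding here.)
    fn propose_shards(&mut self, parent_height: u64) -> Vec<Vec<u8>> {
        let block = self.inner.propose(parent_height);
        let chunk_len = ((block.len() + self.shards - 1) / self.shards).max(1);
        block.chunks(chunk_len).map(|c| c.to_vec()).collect()
    }

    /// Reassemble the block and defer to the application's own `verify`.
    fn certify(&self, shards: &[Vec<u8>]) -> bool {
        self.inner.verify(&shards.concat())
    }
}
```

The application's `propose` and `verify` logic is untouched; only the wrapper knows that blocks travel as shards.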

Interested in upgrading to marshal::coding at some point but not quite ready yet? We continue to support marshal::standard::Inline (all you need is a height and a parent digest) and marshal::standard::Deferred (if your block stores the consensus context, we can defer the verification check until certify).

Alto Benchmarks

For global Alto benchmarks, we deploy 50 validators uniformly across ap-northeast-1, ap-northeast-2, ap-south-1, ap-southeast-2, eu-central-1, eu-north-1, eu-west-1, sa-east-1, us-east-1, and us-west-1. Each validator runs on a c8g.2xlarge instance with 8 vCPUs: 4 dedicated to tokio and 4 dedicated to a rayon thread pool used for parallel hashing and signature verification.

The first experiment fixes block size at 4MiB to emphasize low view latency. In this configuration, the coding marshal sustains about 14 MB/s while keeping average view latency around 300ms.

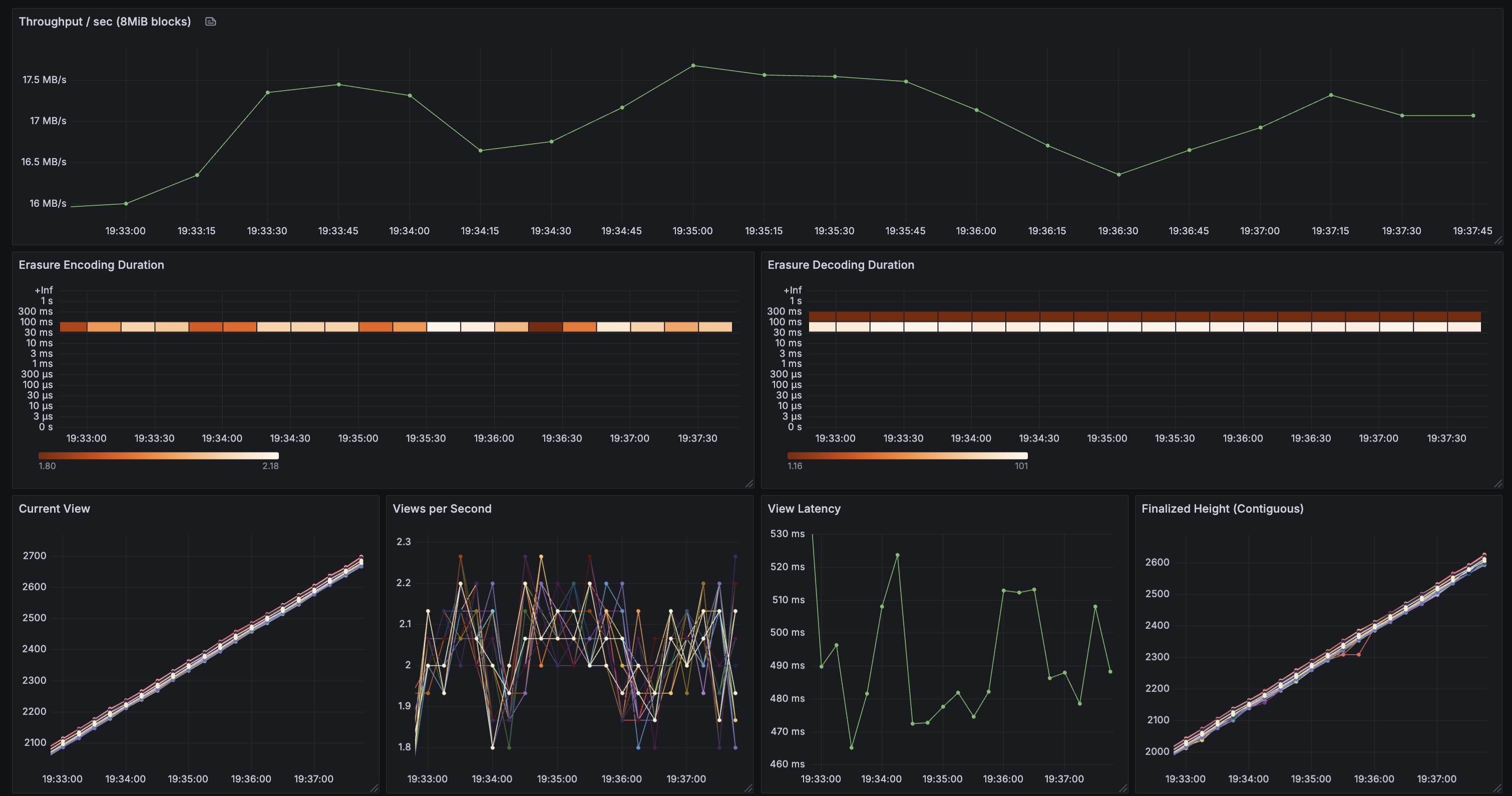

coding::reed_solomon and cryptography::blake3, achieving ~14 MB/s throughput and ~300ms view latency with statically sized 4MiB blocks.

The second experiment increases block size to 8MiB to push throughput. Throughput rises to about 17 MB/s while view latency stays below 500ms, showing that larger blocks remain practical on the same global topology.
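As a rough consistency check, and assuming one block is produced per view (an assumption; the results above report the two quantities separately), throughput is simply block size divided by view latency. The function name is illustrative.

```rust
/// Bytes in one mebibyte.
const MIB: f64 = 1_048_576.0;

/// Throughput in megabytes (10^6 bytes) per second if one block of
/// `block_mib` MiB is produced every `view_ms` milliseconds.
fn throughput_mb_per_s(block_mib: f64, view_ms: f64) -> f64 {
    let bytes_per_view = block_mib * MIB;
    let seconds_per_view = view_ms / 1000.0;
    bytes_per_view / seconds_per_view / 1e6
}
```

4 MiB every ~300 ms works out to about 14.0 MB/s, and 8 MiB at the 500 ms ceiling to about 16.8 MB/s, in line with the measured figures.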

coding::reed_solomon and cryptography::blake3, achieving ~17 MB/s throughput and <500ms view latency with statically sized 8MiB blocks.

What Comes Next

Delivering blocks in pieces is one part of a larger roadmap toward faster and more composable consensus deployments. marshal::coding is now in ALPHA and will be included in our next release.

Can you have too much blockspace?